Seminars @ Biostatistics, UCPH

Upcoming seminars

Thursday, December 04, 15:00

We introduce NeuralSurv, the first deep survival model to incorporate Bayesian uncertainty quantification. Our non-parametric, architecture-agnostic framework captures time-varying covariate-risk relationships in continuous time via a novel two-stage data-augmentation scheme, for which we establish theoretical guarantees. For eNicient posterior inference, we introduce a mean-field variational algorithm with coordinate-ascent updates that scale linearly in model size. By locally linearizing the Bayesian neural network, we obtain full conjugacy and derive all coordinate updates in closed form. In experiments, NeuralSurv delivers superior calibration compared to state-of-the-art deep survival models, while matching or exceeding their discriminative performance across both synthetic benchmarks and real-world datasets. Our results demonstrate the value of Bayesian principles in data-scarce regimes by enhancing model calibration and providing robust, well-calibrated uncertainty estimates for the survival function.

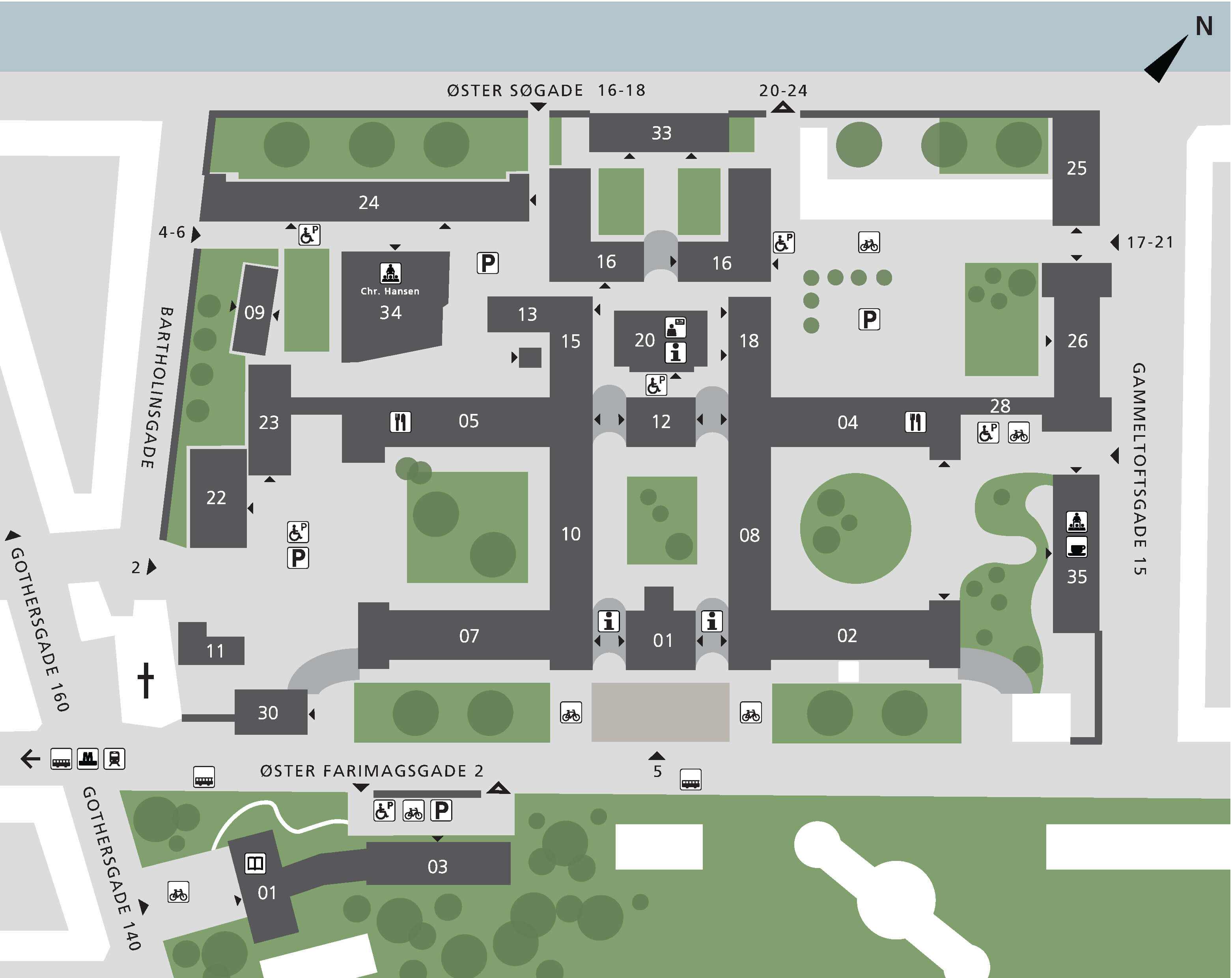

Map of CSS

You can find CSS next to the Botanical Garden, 5 minutes from Nørreport station.

Meeting room 5.2.46 is the library of the Biostatistics section, located in building 5, 2nd floor, room 46. See the map below for directions inside CSS.