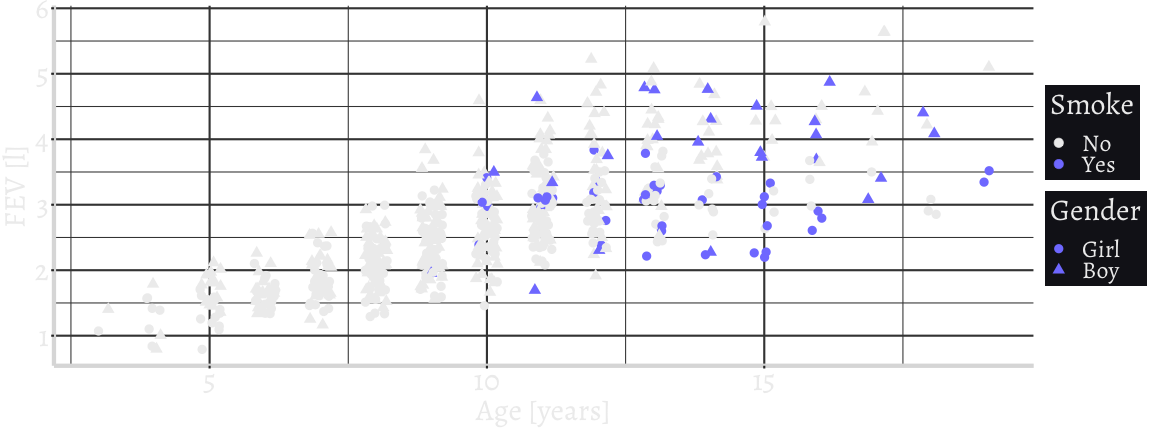

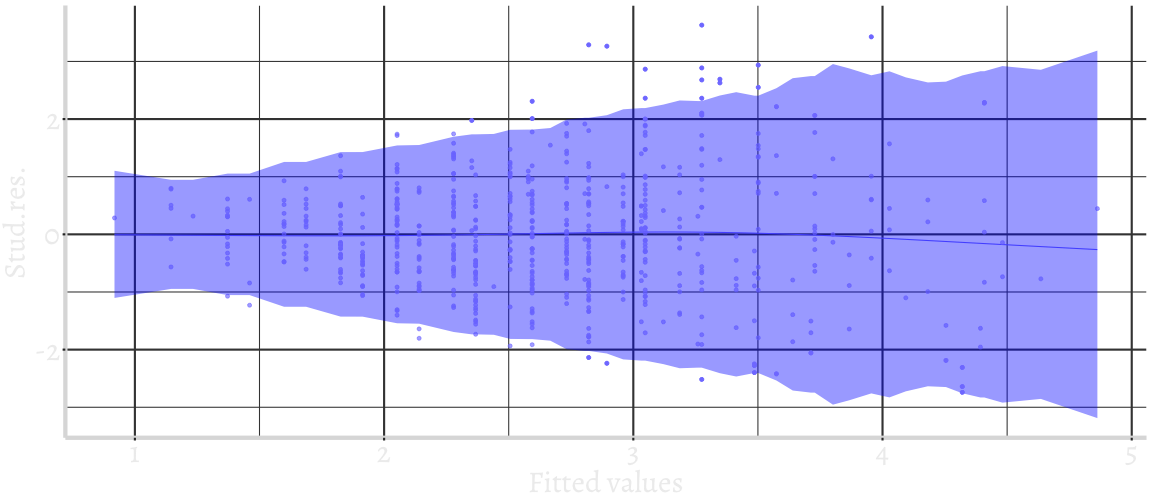

class: center, middle, inverse, title-slide

# Validation of visual inference methods in statistics by use of DL

### Claus Thorn Ekstrøm and Anne Helby Petersen<br>UCPH Biostatistics<br>.small[<a href="mailto:ekstrom@sund.ku.dk" class="email">ekstrom@sund.ku.dk</a> ]

### <span class="citation">@ClausEkstrom</span> <br> .small[Slides: <a href="https://www.biostatistics.dk/talks/">biostatistics.dk/talks/</a>]

---
class: animated, fadeIn
layout: true

---
class: inverse, middle, center

# *"You must illustrate before you calculate"*

???

Is the model reasonable? Linearity?

---

# Model validation

`$$FEV = f(\text{Smoke}, \text{Age}, \text{Sex}) + \varepsilon$$`

---

# What are we looking for?

.caption-right-vertical[`residual_plot()` function from the MESS package.]

---
background-image: url(pics/wally.png)
background-size: 20%

# Wally plots / lineups

.caption-right-vertical[`wallyplot()` function from the MESS package.]

---
class: inverse, center

<br><br><br><br>

# Why?
--

What is the best method to teach / use?

---

# Visual validation inference (MTurk)

.pull-left[
.small[
* Design choices, comparing differences in distributions, variable selection, ...

Potential problems:

* Difficult to obtain a sample from the right population
* Limited geographical reach / tradition
* Can people be *trained* to do it?
]
]

.pull-right[
<img src="pics/turk.jpeg" width="2560" />
]

.caption-right-vertical[Image from Wikipedia]

---

# General idea

*How can statistical plots used for binary decisions be validated?*

Train a deep neural network (DNN) to make the classification/decision.

* If a DNN *cannot* be trained to classify the plots well, then it is unlikely that humans will be able to do so.
* If a DNN *can* be trained, then the plot contains relevant information. This is a necessary, but not sufficient, condition for validity.

A complement to experiments with human subjects.

---

# Example

Let `\(f_0\)` and `\(f_\text{alt}\)` be the data-generating processes (DGPs) for two situations:

`$$f_0(X, \varepsilon) = X + \varepsilon$$`

`$$f_\text{alt}(X, \varepsilon) = \exp(X) + \varepsilon$$`

and let the plotting function be

`$$g_\text{scatter}(x, y) = \text{draw scatter plot, annotate with LS-line}$$`

`\(X\)` and `\(\varepsilon\)` are both `\({\cal N}(0,1)\)`.

---
background-image: url(pics/fig1.png)
background-size: 90%

---

# Validity of binary visual inference methods

Consider two DGPs `\(f_0\)` and `\(f_\text{alt}\)`. Can `\(g\)` distinguish between them?

1. Does `\(g\)` convey enough information about `\(f_0\)` and `\(f_\text{alt}\)` to make it technically possible to tell them apart? <br> If yes: `\(g\)` is *technically valid* for distinguishing between `\(f_0\)` and `\(f_\text{alt}\)`.
2. Is it feasible to train human inspectors to tell examples of `\(g_0\)` and `\(g_\text{alt}\)` apart?<br> If yes: `\(g\)` is *practically valid* for distinguishing between `\(f_0\)` and `\(f_\text{alt}\)`.

The answer clearly depends on the distance between `\(f_0\)` and `\(f_\text{alt}\)`.
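---

# Sketch: simulating the example DGPs

The following is a minimal Python sketch of the data behind `\(g_\text{scatter}\)` in the example. It is not the authors' code, which is not shown here.

The sketch draws `\(X\)` and `\(\varepsilon\)` from `\({\cal N}(0,1)\)`, applies `\(f_0\)` or `\(f_\text{alt}\)`, and computes the least-squares line that the scatter plot is annotated with. The helper names `simulate` and `ls_line` are hypothetical, and rendering the actual image is left out.

```python
import math
import random

def f_0(x, eps):
    # Null DGP: linear relationship plus noise.
    return x + eps

def f_alt(x, eps):
    # Alternative DGP: exponential relationship plus noise.
    return math.exp(x) + eps

def simulate(dgp, n, rng):
    # Draw n observations with X ~ N(0, 1) and epsilon ~ N(0, 1).
    xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
    ys = [dgp(x, rng.gauss(0.0, 1.0)) for x in xs]
    return xs, ys

def ls_line(xs, ys):
    # Ordinary least-squares intercept and slope (the "LS-line" on the plot).
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

rng = random.Random(1)  # fixed seed for reproducibility
for name, dgp in [("null", f_0), ("alt", f_alt)]:
    xs, ys = simulate(dgp, 50, rng)
    intercept, slope = ls_line(xs, ys)
    print(f"{name}: intercept={intercept:.2f}, slope={slope:.2f}")
```

Under `f_alt` the fitted line is only a linear summary of a convex relationship. The curvature therefore shows up as systematic deviations of the points around the line, and that is the information a classifier `\(h\)` would have to pick up.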
---

# Validity of binary visual inference methods

Consider two DGPs `\(f_0\)` and `\(f_\text{alt}\)`. Can `\(g\)` distinguish between them?

1. .yellow[Does `\(g\)` convey enough information about `\(f_0\)` and `\(f_\text{alt}\)` to make it technically possible to tell them apart? <br> If yes: `\(g\)` is *technically valid* for distinguishing between `\(f_0\)` and `\(f_\text{alt}\)`.]
2. Is it feasible to train human inspectors to tell examples of `\(g_0\)` and `\(g_\text{alt}\)` apart?<br> If yes: `\(g\)` is *practically valid* for distinguishing between `\(f_0\)` and `\(f_\text{alt}\)`.

The answer clearly depends on the distance between `\(f_0\)` and `\(f_\text{alt}\)`.

---

# Measuring the technical validity

1. Simulate plots `\(\rightarrow\)` 2. Train model `\(\rightarrow\)` 3. Score model

<img src="pics/train.png" width="2560" height="400" />

---

# Simulate plots

Let `\(n\)` be the sample size for each plot, `\(M\)` the number of plots (of each type), and `\(r\)` the proportion of plots used as training data.

Create `\(M\)` pairs of plots, `\(m = 1, \ldots, M\)`,

`$$g_0^m = g(f_0^m)$$`

and

`$$g_\text{alt}^m = g(f_\text{alt}^m)$$`

where `\(f_0^m\)` and `\(f_\text{alt}^m\)` denote the `\(m\)`th datasets of size `\(n\)` simulated from each DGP.

---

# Train model

Construct a classifier `\(h : g \rightarrow \{\text{null}, \text{alt}\}\)` from the training data.

Generic solution: `\(h\)` is a deep convolutional neural network.

Modified Inception architecture (v3).

Classify: 🐘, 🌱, 🚗, ☎️, 🏐, ...

Classification error: 6.67%

.caption-right-vertical[Szegedy et al. (2015). *Rethinking the Inception Architecture for Computer Vision*]

---

# Technical validity score

`$$TVS(g, f_0, f_\text{alt}, n, h) = \frac{1}{n_\text{val}} \sum_{i=1}^{n_\text{val}} \mathbb{1}(h(g_\text{val}^i) = Y_\text{val}^i)$$`

That is, the TVS is the proportion of the `\(n_\text{val}\)` validation plots that `\(h\)` classifies correctly.

To reduce sensitivity to the particular training epoch, use the 80th percentile of the TVS over all epochs.

Must stress: only compare TVS values obtained with similar protocols for constructing `\(h\)`.

---

# Example - linear regression

Compare three plot functions:

* Scatter plot with LS line.
* QQ-plot with added identity line.
* Residual plot with horizontal line at 0.

The plotting software scales the axes automatically.
Number of epochs fixed at 20. 5000 plots, 10% used for testing.

Four DGPs: null, `\(A\)`, `\(B\)`, and `\(C\)`.

`\(n \in \{5, 10, 20, 50\}\)`.

`\(X \sim {\cal N}(0, \sigma^2)\)`, `\(\varepsilon \sim {\cal N}(0, 1)\)`, `\(\sigma^2 \in \{0.5, 1, 2\}\)`.

???

We will compute a TVS for each of these three plots in order to determine whether each is useful for identifying model misspecification in linear normal regression. This gives us comparable numbers, so we can discuss not only whether each plot is valid for the task, but also which one is most useful for identifying misspecification.

---
background-image: url(pics/fig2.png)
background-size: 70%

---
background-image: url(pics/fig3.png)
background-size: 78%

---
background-image: url(pics/fig4.png)
background-size: 93%

# Calibration score - mechanic C, `\(\sigma^2=1\)`

<br><br><br><br><br><br><br><br><br>

Note: scatter plot, `\(n=5\)`

---

# Summary

.small[
Generic approach for comparing the inferential aspects of plots used for visual validation.

* Can quantify and compare the potential information content
* Does not rely on asymptotics or rules of thumb
* Accuracy in the TVS definition can be replaced by another performance measure

Caveats:

* Binary decisions only
* Identical in one way, but differ in infinitely many ways
* Useful for human investigators? Pinpoint problems
]
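---

# Sketch: computing the TVS

The following is a minimal Python sketch of the TVS formula and of the 80th-percentile summary over epochs. It assumes that the classifier `\(h\)` has already produced a label ("null" or "alt") for every validation plot after each epoch. Training the Inception network itself is not shown.

The function names are hypothetical. The percentile uses linear interpolation between order statistics, which is one of several common definitions; the choice is an assumption.

```python
import math

def tvs(predicted, truth):
    # TVS = (1 / n_val) * sum of indicators 1(h(g_val^i) == Y_val^i),
    # i.e. the proportion of validation plots classified correctly.
    if not truth or len(predicted) != len(truth):
        raise ValueError("need one prediction per validation plot")
    return sum(p == y for p, y in zip(predicted, truth)) / len(truth)

def percentile(values, q):
    # q-th percentile (0 <= q <= 100) by linear interpolation (assumed definition).
    xs = sorted(values)
    pos = (len(xs) - 1) * q / 100
    lo, hi = math.floor(pos), math.ceil(pos)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)

def summarised_tvs(predictions_per_epoch, truth, q=80):
    # One TVS per epoch, summarised by its q-th percentile across epochs.
    scores = [tvs(pred, truth) for pred in predictions_per_epoch]
    return percentile(scores, q)

truth = ["null", "alt", "null", "alt"]
epochs = [
    ["null", "null", "null", "null"],  # 2 of 4 correct
    ["null", "alt", "alt", "alt"],     # 3 of 4 correct
    ["null", "alt", "null", "alt"],    # 4 of 4 correct
]
print(summarised_tvs(epochs, truth))
```

A high percentile is less sensitive than the best single epoch to one unusually good or bad epoch.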